Extended Common Load Modelling : example¶

Import the required modules

[1]:

%matplotlib inline

import openturns as ot

import oteclm

from openturns.viewer import View

We consider a common cause failure (CCF) groupe with n=7 identical and independent components. The total impact vector of this CCF group is estimated after N=1002100 demands or tests on the group.

[2]:

n = 7

vectImpactTotal = ot.Indices(n+1)

vectImpactTotal[0] = 1000000

vectImpactTotal[1] = 2000

vectImpactTotal[2] = 200

vectImpactTotal[3] = 30

vectImpactTotal[4] = 20

vectImpactTotal[5] = 5

vectImpactTotal[6] = 0

vectImpactTotal[7] = 0

Create the ECLM class. We will use the Gauss Legendre quadrature algorithm to compute all the integrals of the ECLM model. The use of 50 points is sufficicient to reach a good precision.

[3]:

myECLM = oteclm.ECLM(vectImpactTotal, ot.GaussLegendre([50]))

Estimate the optimal parameter

We use the Mankamo assumption. We use the maximum likelihood estimators of the Mankamo parameter. We want to get all the graphs of the likelihood function at the optimal Mankamo parameter.

We start by verifying that our starting point for the optimization algorithm verifies the constraints!

[4]:

startingPoint = [5.0e-3, 0.51, 0.85]

print(myECLM.verifyMankamoConstraints(startingPoint))

False

If the point is not valid, we can ask for a valid one by giving .

[5]:

startingPoint = myECLM.computeValidMankamoStartingPoint(0.7)

startingPoint

[5]:

[0.00018494,0.35,0.7]

Anyway, if the starting point is not valid, the function estimateMaxLikelihoodFromMankamo will automatically change it by itself.

[6]:

visuLikelihood = True

mankamoParam, generalParam, finalLogLikValue, graphesCol = myECLM.estimateMaxLikelihoodFromMankamo(startingPoint, visuLikelihood, verbose=False)

print('Mankamo parameter : ', mankamoParam)

print('general parameter : ', generalParam)

print('finalLogLikValue : ', finalLogLikValue)

Production of graphs

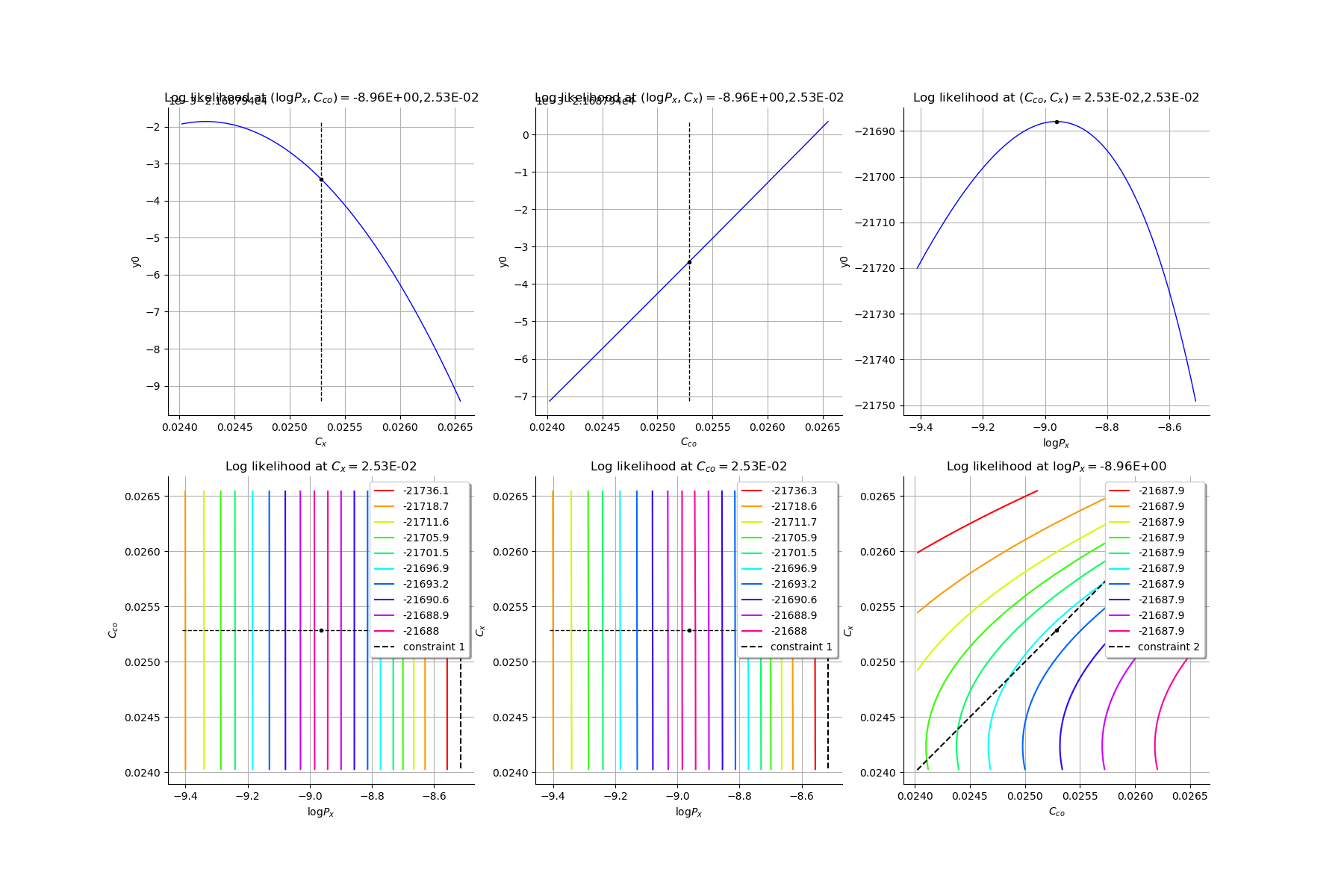

graph (Cco, Cx) = (Cco_optim, Cx_optim)

graph (logPx, Cx) = (logPx_optim, Cx_optim)

graph (logPx, Cco) = (logPx_optim, Cco_optim)

graph Cx = Cx_optim

graph Cco = Cco_optim

graph logPx = logPx_optim

Mankamo parameter : [0.00036988020585009376, 0.00012801168928232786, 0.025285764581599247, 0.02528576558159925]

general parameter : [0.9992086008496488, 0.04557096775442347, 0.045570968678919084, 0.2829364243530039, 0.7170635756469961]

finalLogLikValue : -21687.94341532473

[8]:

gl = ot.GridLayout(2,3)

for i in range(6):

gl.setGraph(i//3, i%3, graphesCol[i])

gl

[8]:

Compute the ECLM probabilities

[9]:

PEG_list = myECLM.computePEGall()

print('PEG_list = ', PEG_list)

print('')

PSG_list = myECLM.computePSGall()

print('PSG_list = ', PSG_list)

print('')

PES_list = myECLM.computePESall()

print('PES_list = ', PES_list)

print('')

PTS_list = myECLM.computePTSall()

print('PTS_list = ', PTS_list)

PEG_list = [0.99775848489789, 0.00028415414547984774, 8.363578290642434e-06, 1.7370202481206713e-06, 3.9410426875976355e-07, 9.648993284764975e-08, 2.5440409734895204e-08, 7.210078132552946e-09]

PSG_list = [0.9999999999999789, 0.00036988020585009104, 2.2089033674126945e-05, 4.001348637317756e-06, 7.671053746399512e-07, 1.5458083044999307e-07, 3.265048786744815e-08, 7.210078132552946e-09]

PES_list = [0.99775848489789, 0.001989079018358934, 0.00017563514410349112, 6.07957086842235e-05, 1.3793649406591724e-05, 2.0262885898006447e-06, 1.7808286814426643e-07, 7.210078132552946e-09]

PTS_list = [0.9999999999999792, 0.002241515102089318, 0.00025243608373038377, 7.680093962689269e-05, 1.6005230942669187e-05, 2.211581536077464e-06, 1.8529294627681937e-07, 7.210078132552946e-09]

Generate a sample of the parameters by Bootstrap

We use the bootstrap sampling to get a sample of total impact vectors. Each total impact vector value is associated to an optimal Mankamo parameter and an optimal general parameter. We fix the size of the bootstrap sample. We also fix the number of realisations after which the sample is saved. Each optimisation problem is initalised with the optimal parameter found for the total impact vector.

The sample is generated and saved in a csv file.

[10]:

Nbootstrap = 100

blockSize = 256

[11]:

startingPoint = mankamoParam[1:4]

fileNameSampleParam = 'sampleParamFromMankamo_{}.csv'.format(Nbootstrap)

myECLM.estimateBootstrapParamSampleFromMankamo(Nbootstrap, startingPoint, blockSize, fileNameSampleParam)

Create the sample of all the ECLM probabilities associated to the sample of the parameters.

[12]:

fileNameECLMProbabilities = 'sampleECLMProbabilitiesFromMankamo_{}.csv'.format(Nbootstrap)

myECLM.computeECLMProbabilitiesFromMankano(blockSize, fileNameSampleParam, fileNameECLMProbabilities)

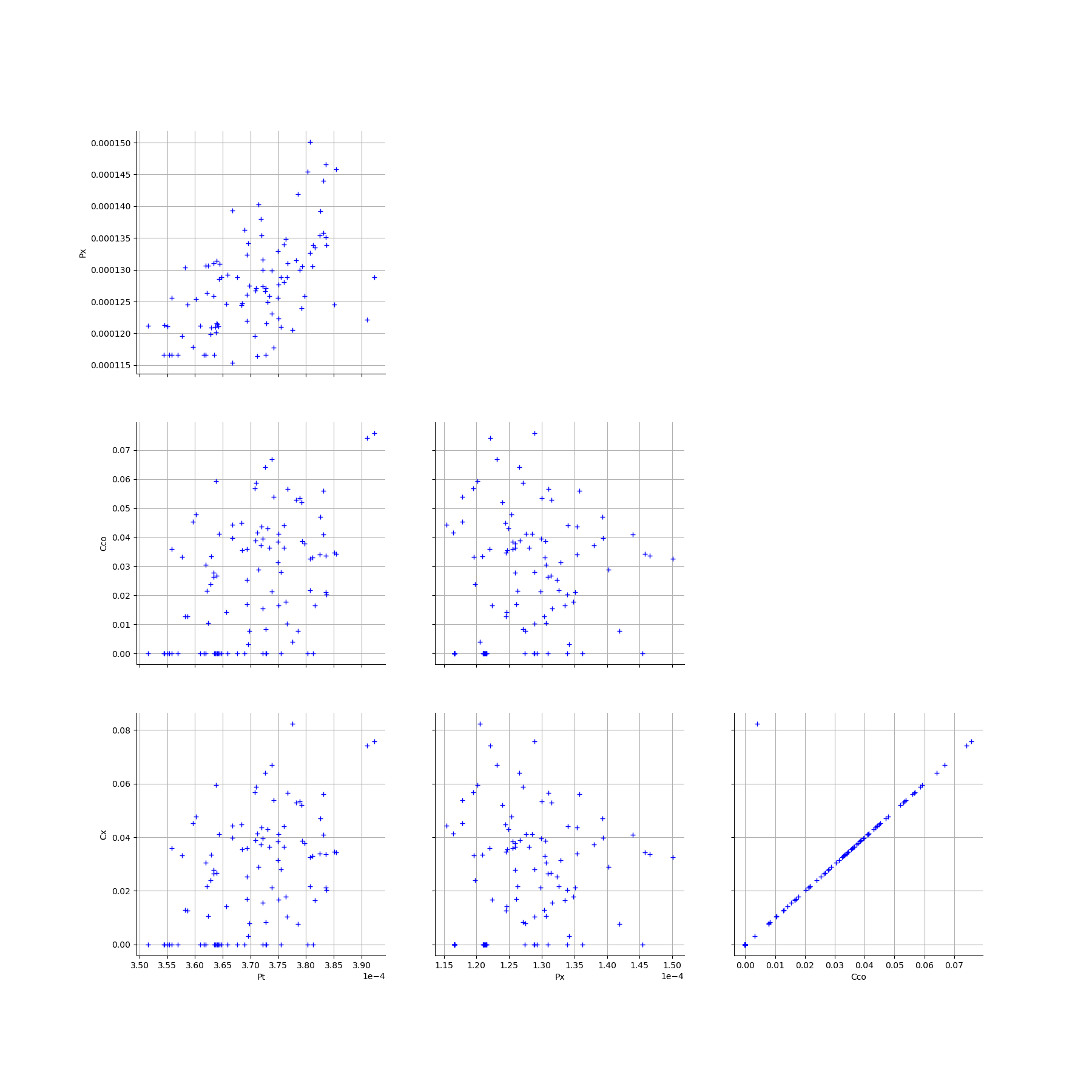

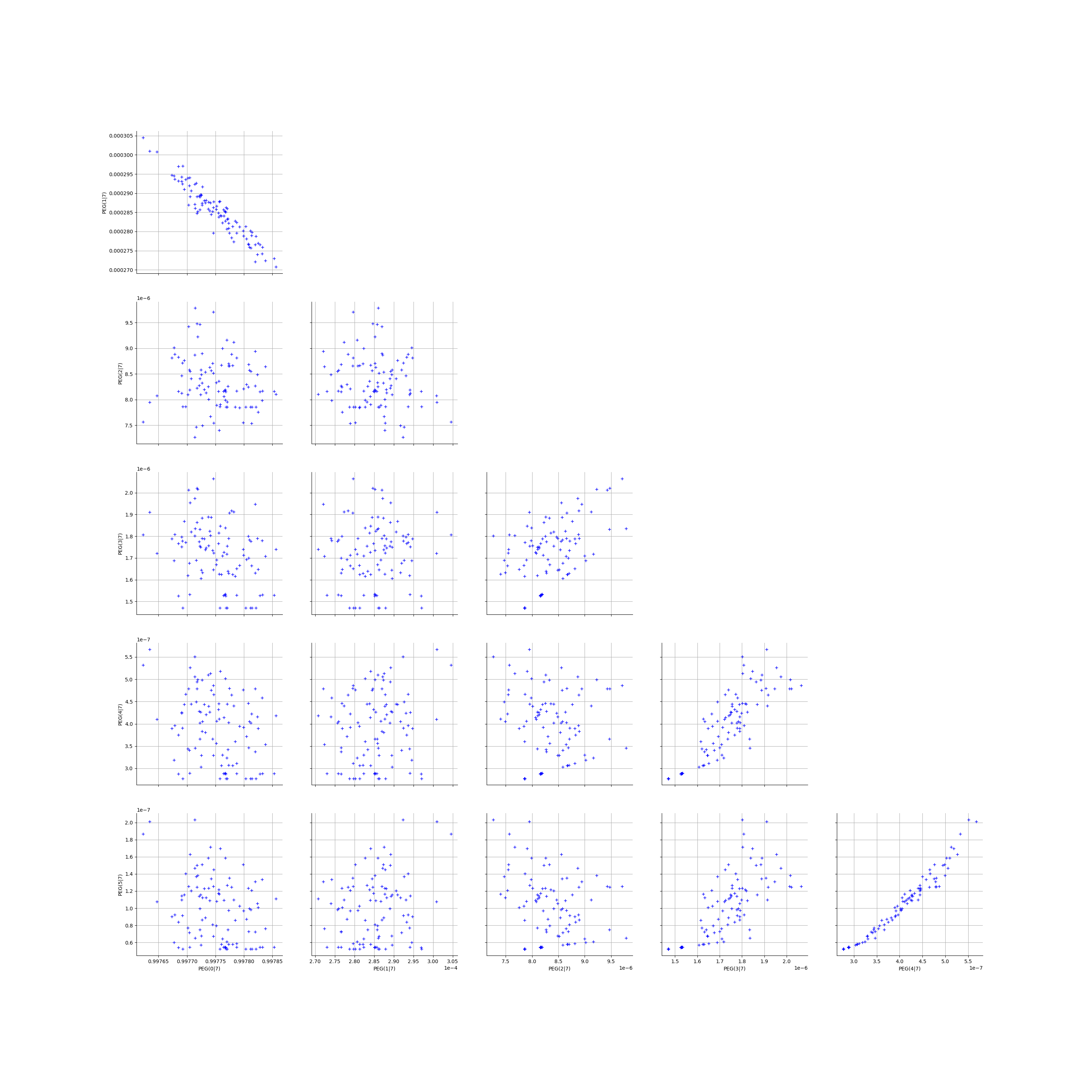

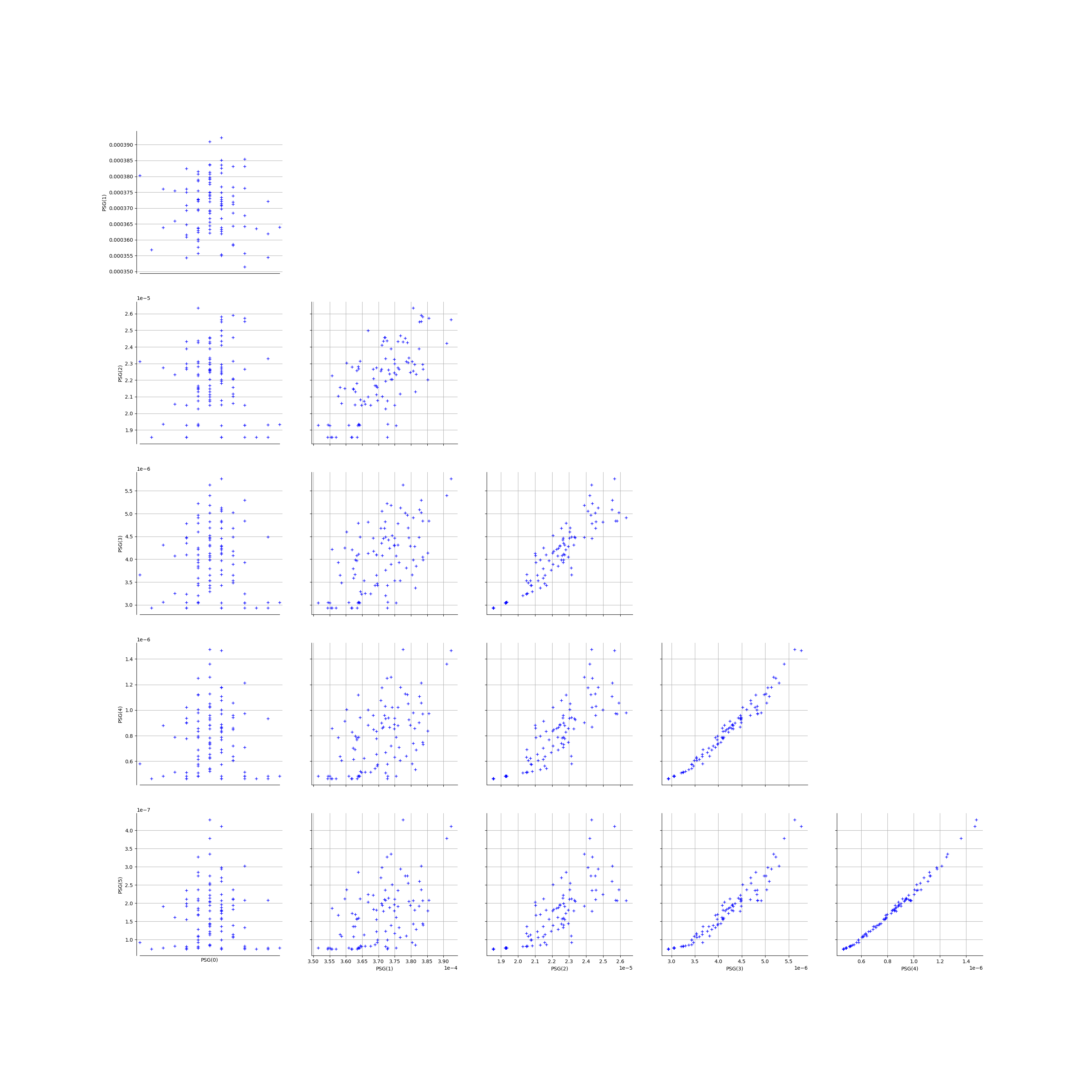

Graphically analyse the bootstrap sample of parameters

We create the Pairs graphs of the Mankamo and general parameters.

[13]:

graphPairsMankamoParam, graphPairsGeneralParam, graphMarg_list, descParam = myECLM.analyseGraphsECLMParam(fileNameSampleParam)

[14]:

graphPairsMankamoParam

[14]:

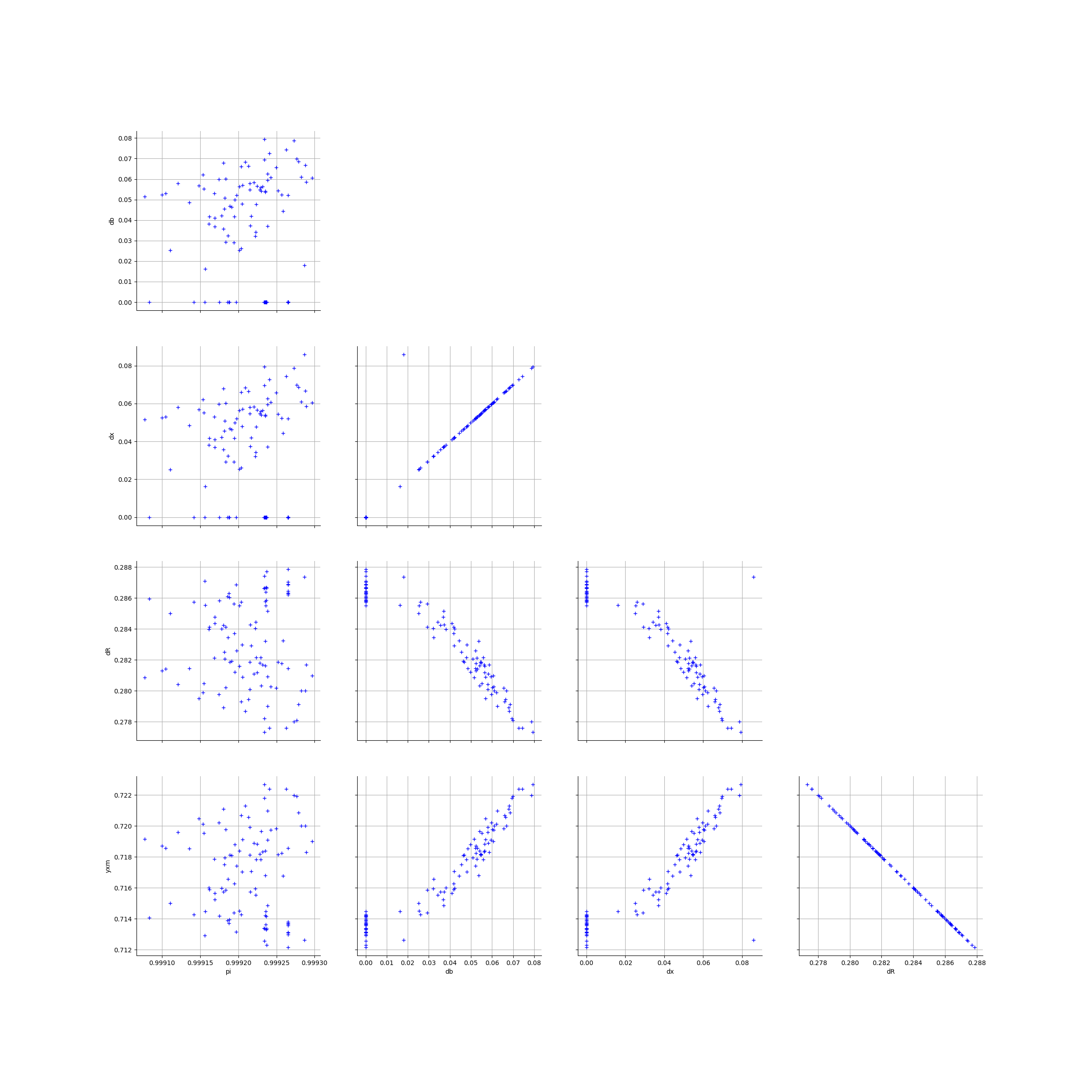

[15]:

graphPairsGeneralParam

[15]:

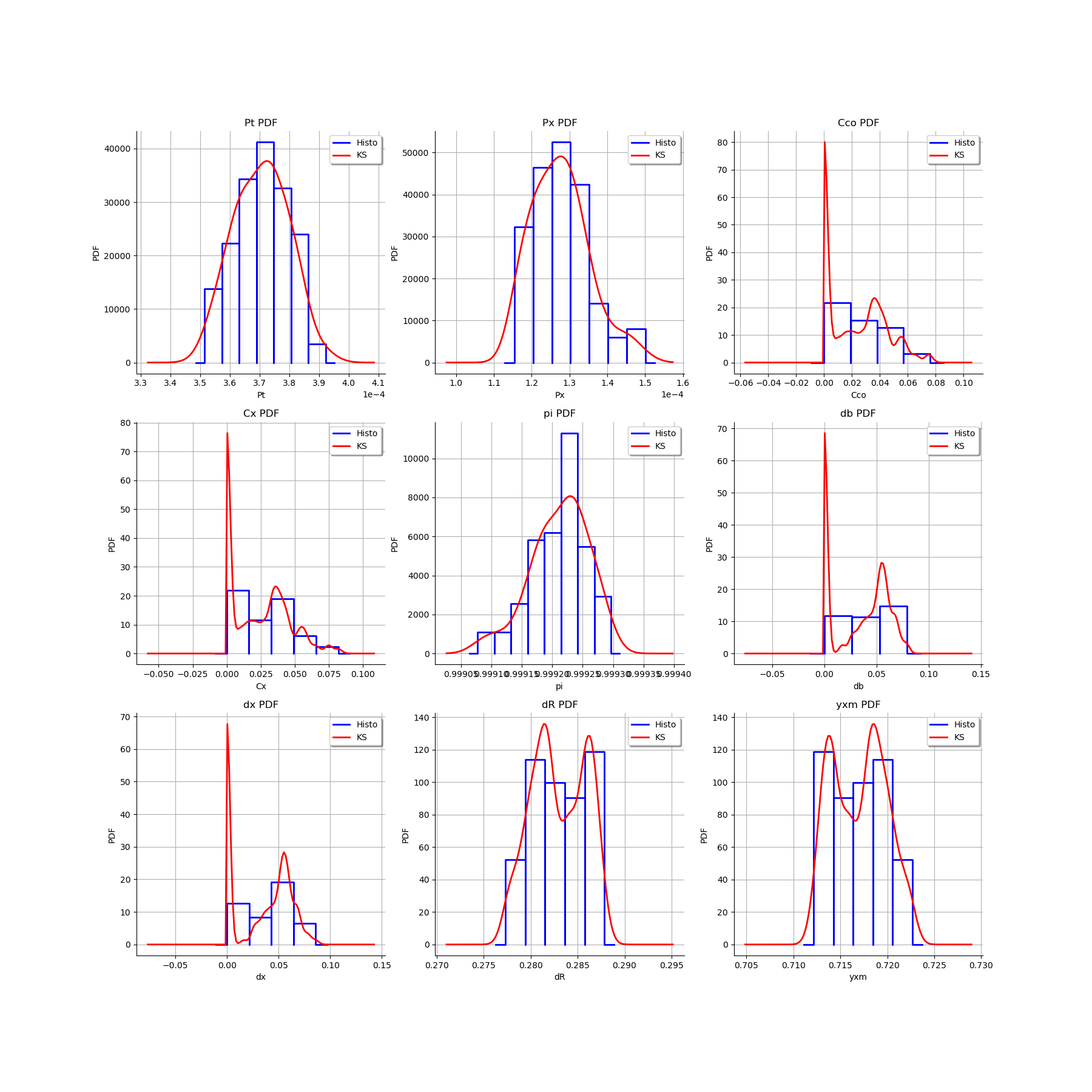

We estimate the distribution of each parameter with a Histogram and a normal kernel smoothing.

[16]:

gl = ot.GridLayout(3,3)

for k in range(len(graphMarg_list)):

gl.setGraph(k//3, k%3, graphMarg_list[k])

gl

[16]:

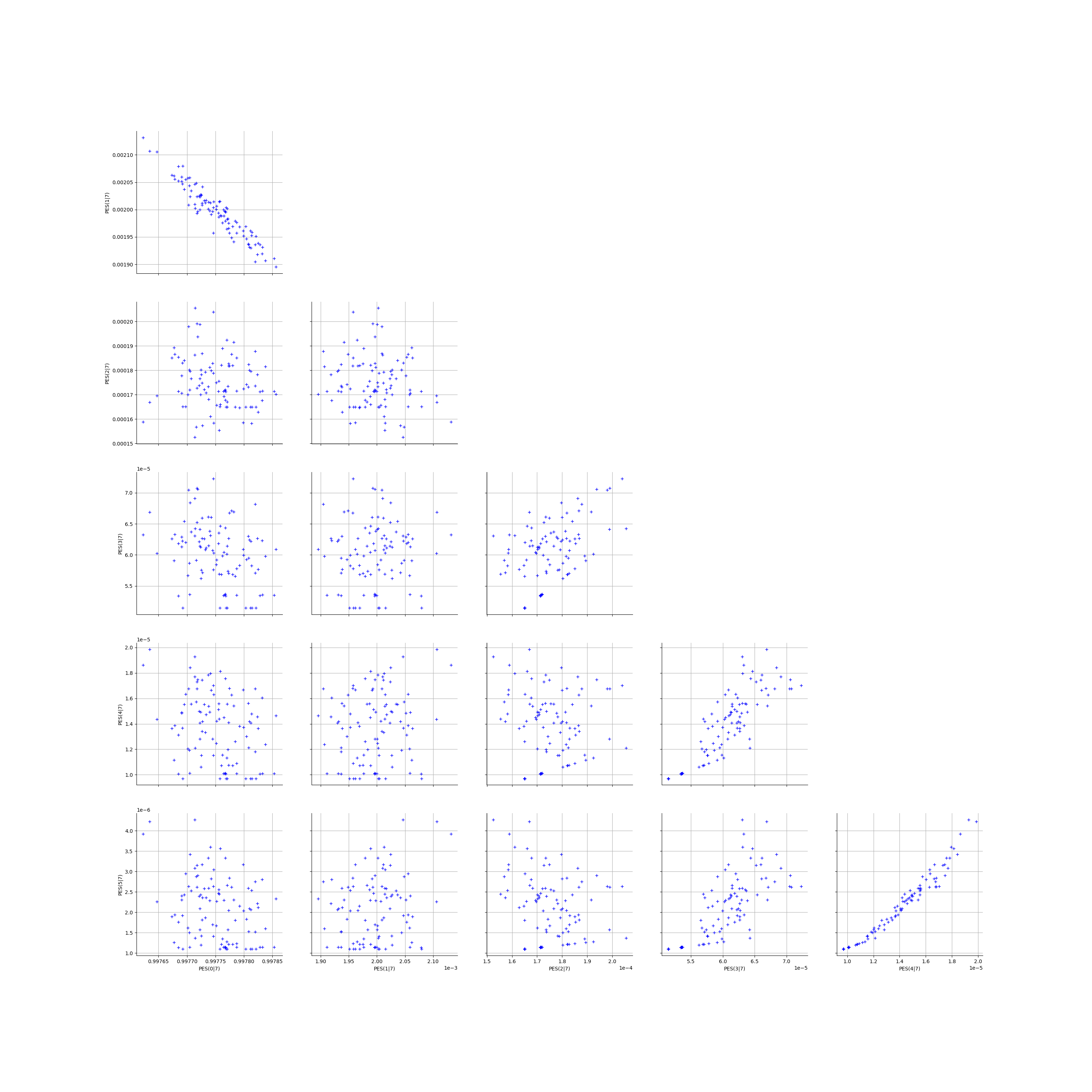

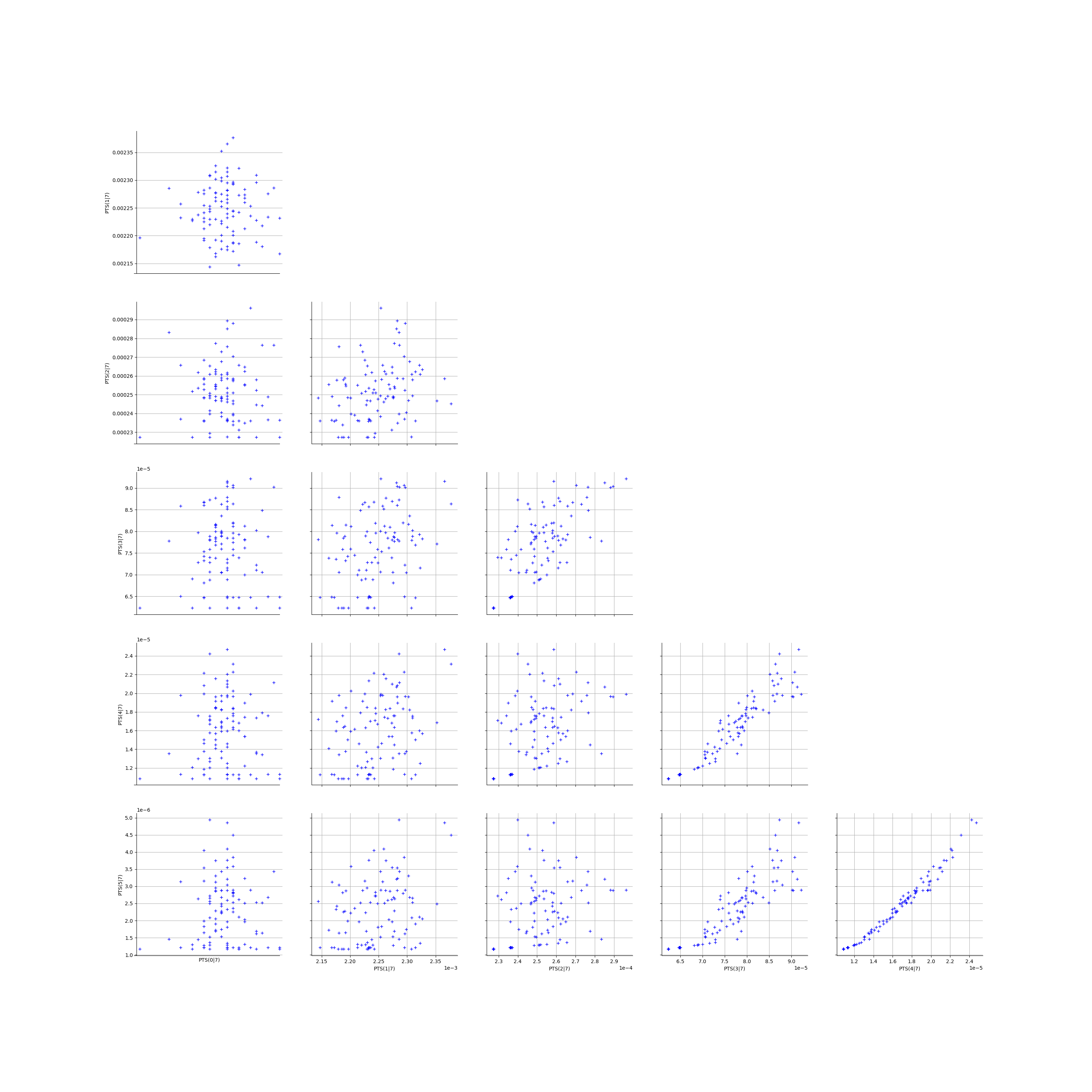

Graphically analyse the bootstrap sample of the ECLM probabilities

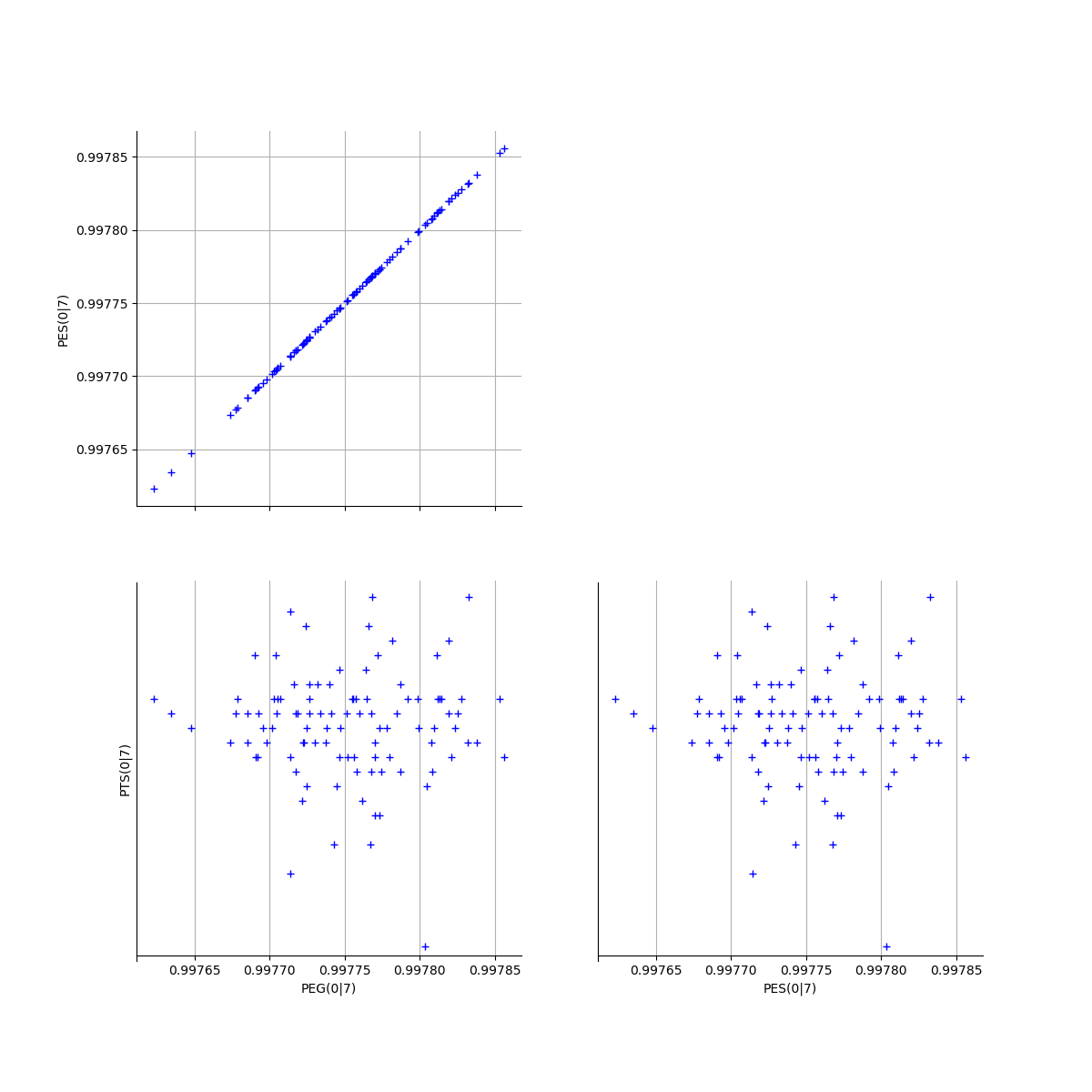

We create the Pairs graphs of all the ECLM probabilities. We limit the graphical study to the multiplicities lesser than .

[17]:

kMax = 5

graphPairs_list, graphPEG_PES_PTS_list, graphMargPEG_list, graphMargPSG_list, graphMargPES_list, graphMargPTS_list, desc_list = myECLM.analyseGraphsECLMProbabilities(fileNameECLMProbabilities, kMax)

[18]:

descPairs = desc_list[0]

descPEG_PES_PTS = desc_list[1]

descMargPEG = desc_list[2]

descMargPSG = desc_list[3]

descMargPES = desc_list[4]

descMargPTS = desc_list[5]

[19]:

graphPairs_list[0]

[19]:

[20]:

graphPairs_list[1]

[20]:

[21]:

graphPairs_list[2]

[21]:

[22]:

graphPairs_list[3]

[22]:

[23]:

# Fix a k <=kMax

k = 0

graphPEG_PES_PTS_list[k]

[23]:

[24]:

len(graphMargPEG_list)

gl = ot.GridLayout(2,3)

for k in range(len(graphMargPEG_list)):

gl.setGraph(k//3, k%3, graphMargPEG_list[k])

gl

[24]:

[25]:

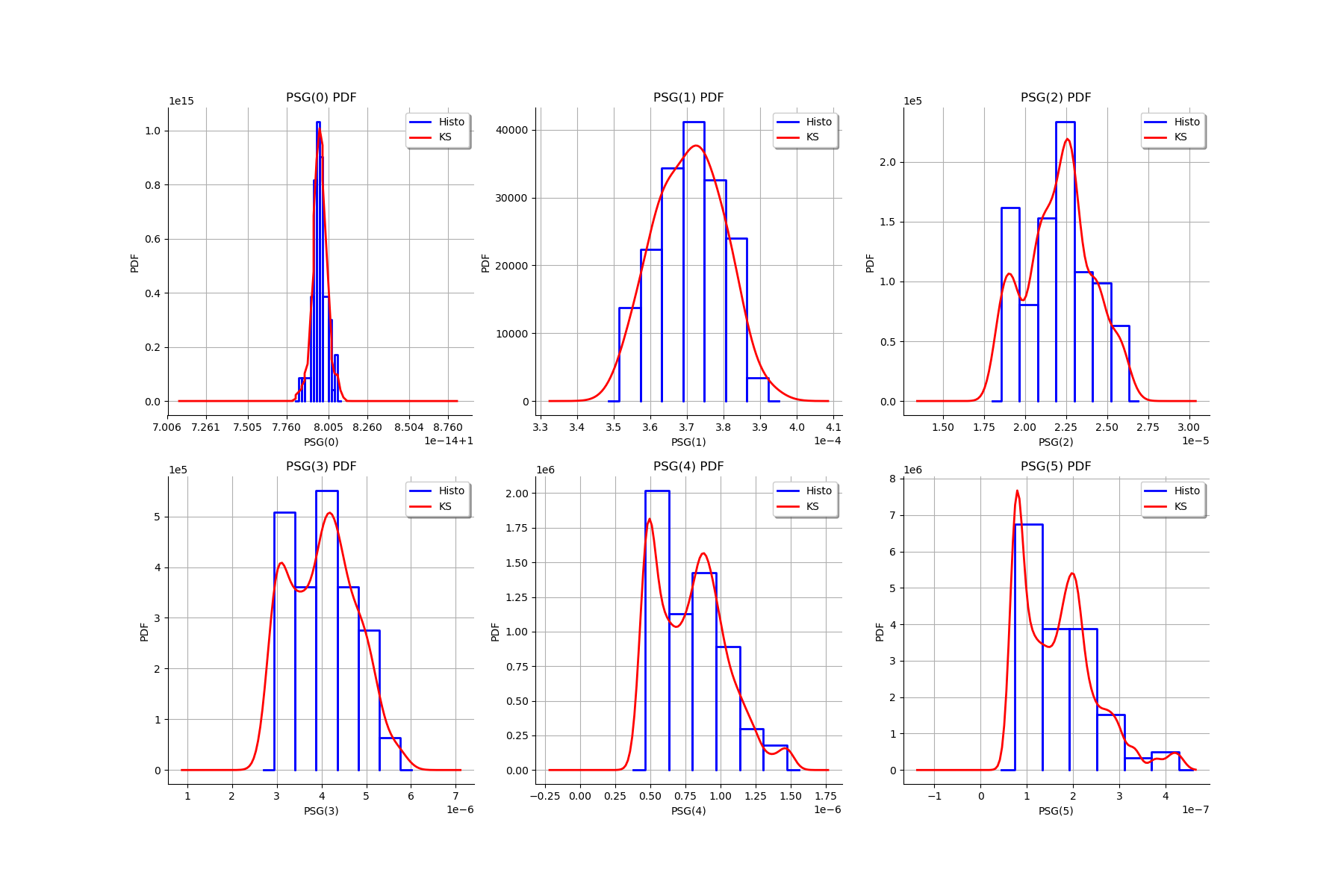

gl = ot.GridLayout(2,3)

for k in range(len(graphMargPSG_list)):

gl.setGraph(k//3, k%3, graphMargPSG_list[k])

gl

[25]:

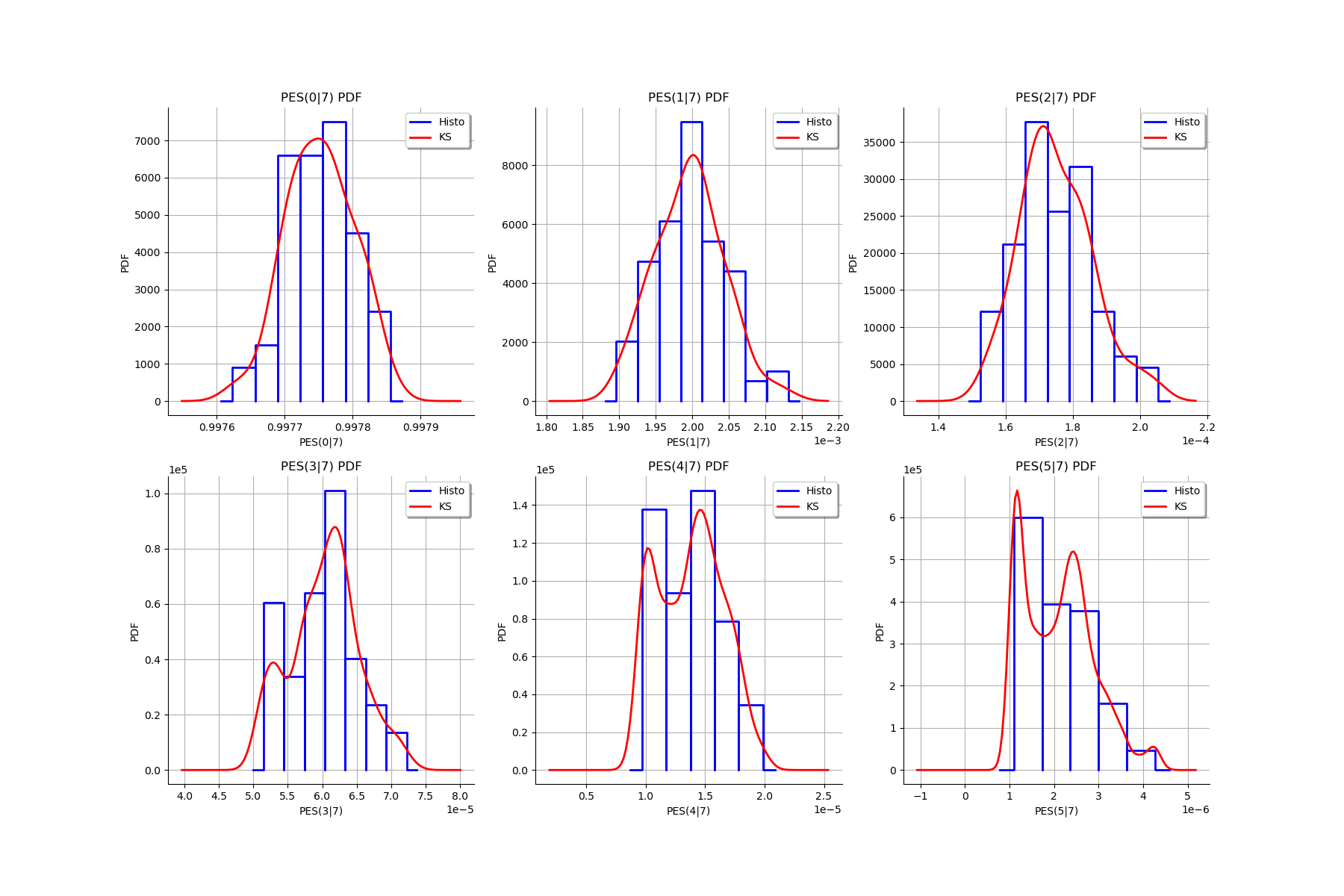

[26]:

gl = ot.GridLayout(2,3)

for k in range(len(graphMargPES_list)):

gl.setGraph(k//3, k%3, graphMargPES_list[k])

gl

[26]:

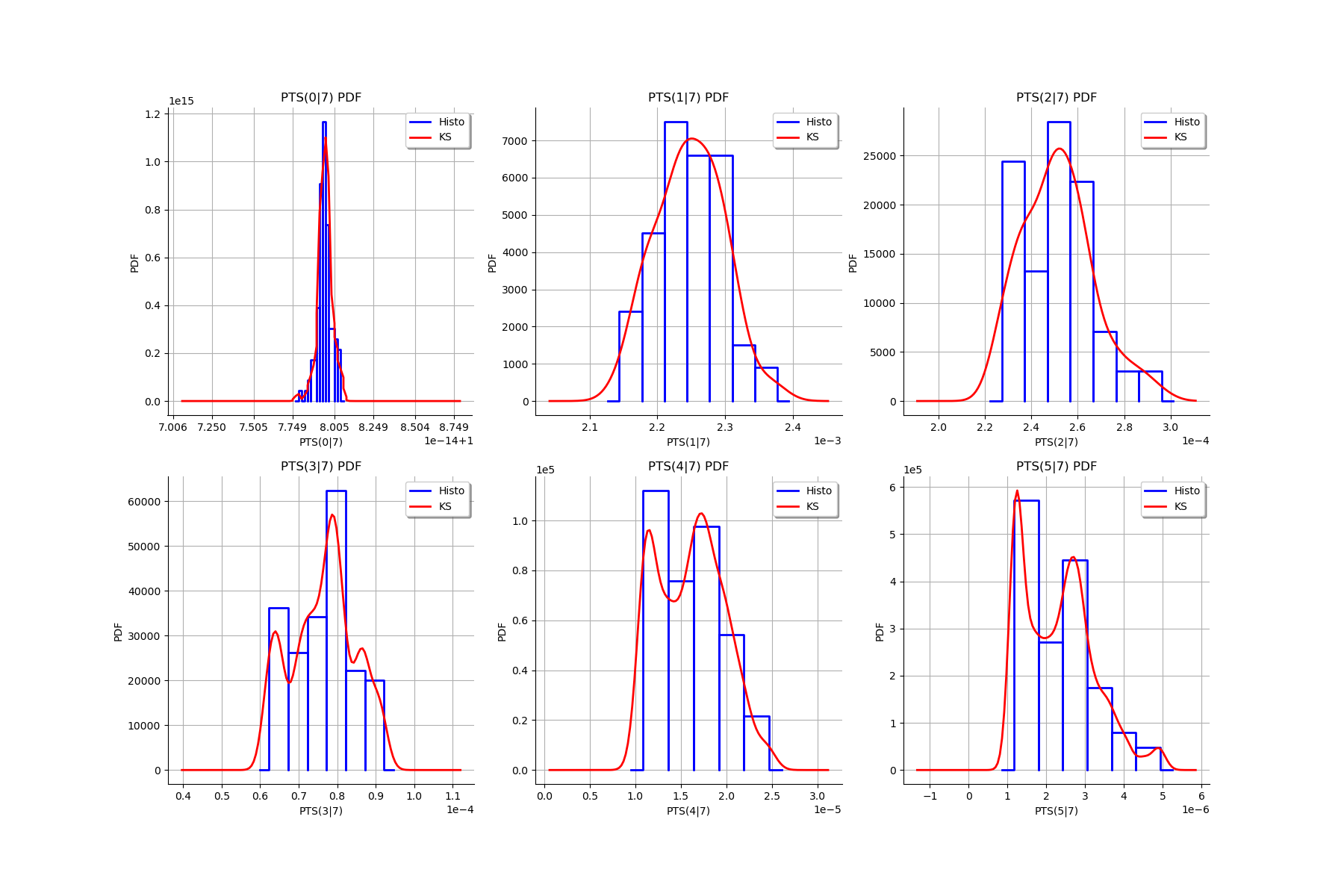

[27]:

gl = ot.GridLayout(2,3)

for k in range(len(graphMargPTS_list)):

gl.setGraph(k//3, k%3, graphMargPTS_list[k])

gl

[27]:

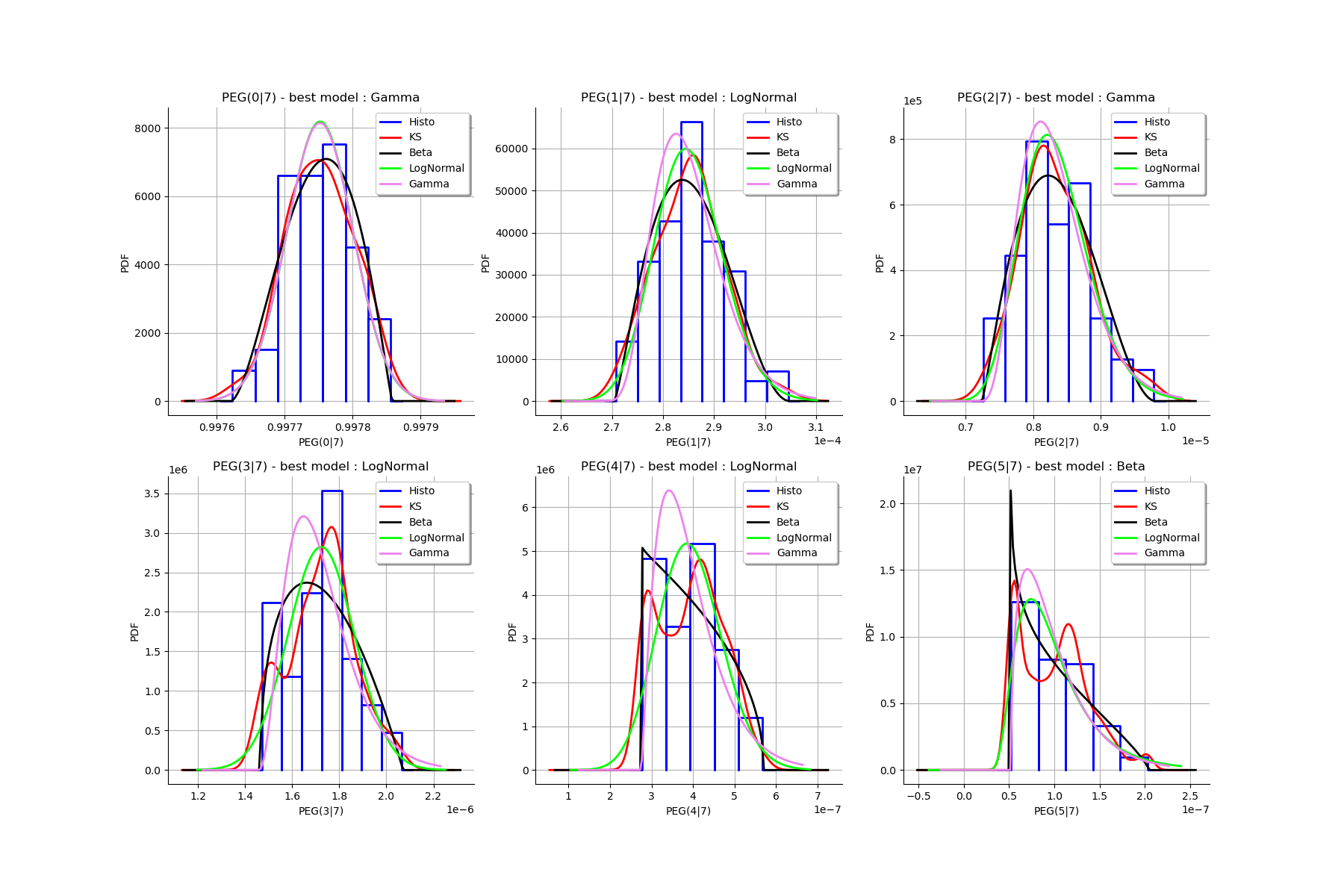

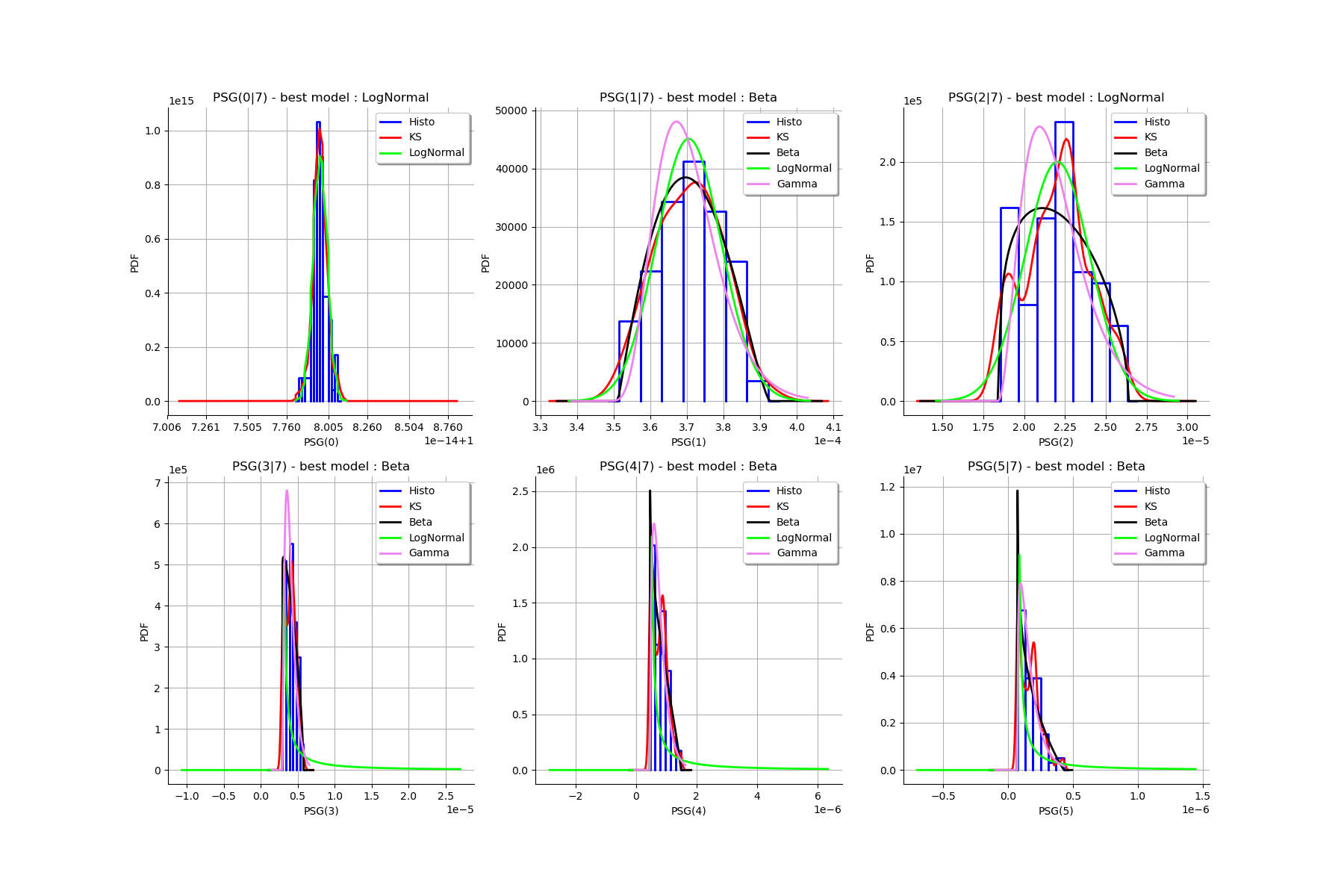

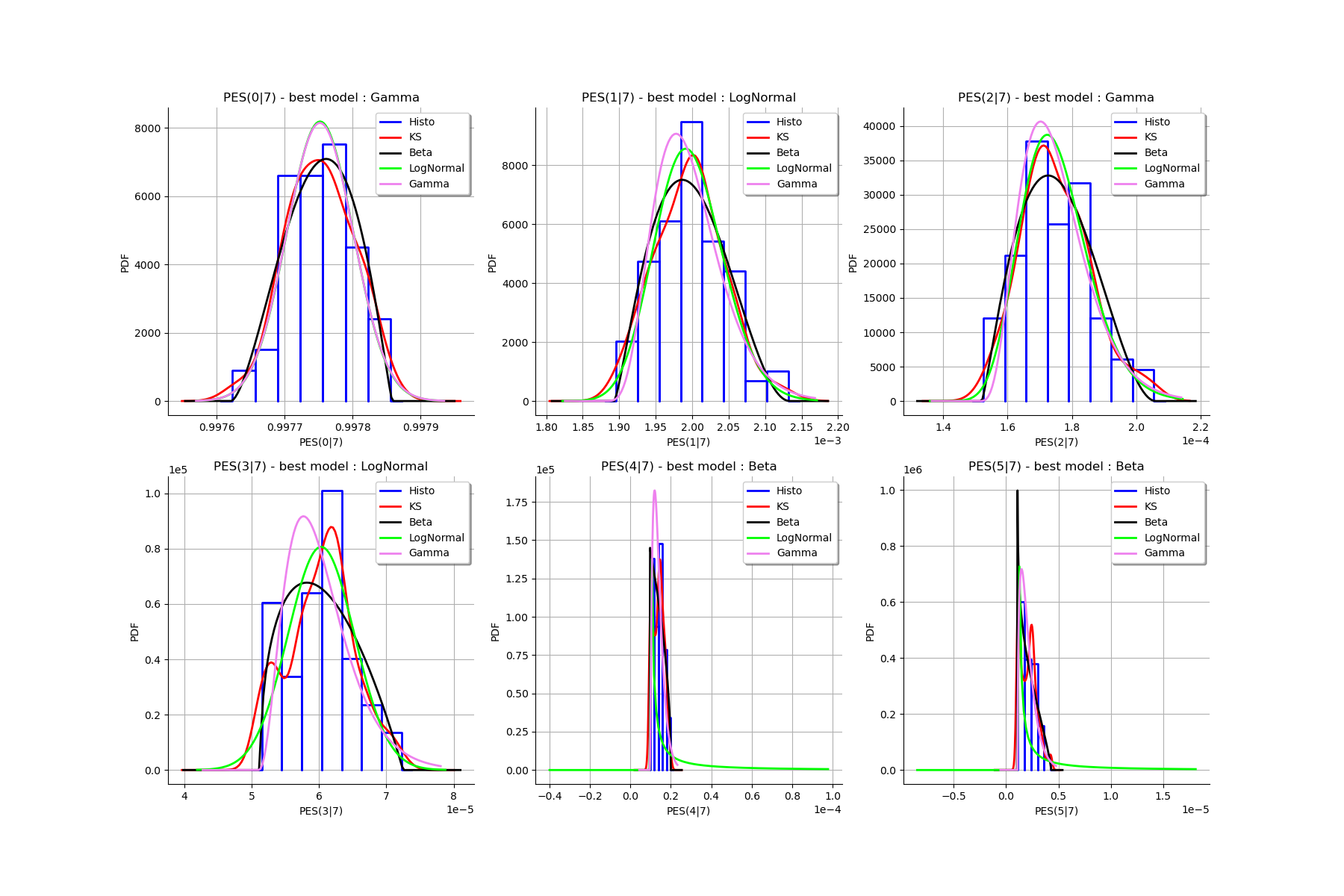

Fit a distribution to the ECLM probabilities

We fit a distribution among a given list to each ECLM probability. We test it with the Lilliefors test. We also compute the confidence interval of the specified level.

[28]:

factoryColl = [ot.BetaFactory(), ot.LogNormalFactory(), ot.GammaFactory()]

confidenceLevel = 0.9

IC_list, graphMarg_list, descMarg_list = myECLM.analyseDistECLMProbabilities(fileNameECLMProbabilities, kMax, confidenceLevel, factoryColl)

IC_PEG_list, IC_PSG_list, IC_PES_list, IC_PTS_list = IC_list

graphMargPEG_list, graphMargPSG_list, graphMargPES_list, graphMargPTS_list = graphMarg_list

descMargPEG, descMargPSG, descMargPES, descMargPTS = descMarg_list

Test de Lilliefors

==================

Ordre k= 0

Best model PEG( 0 |n) : Gamma(k = 40897.7, lambda = 4.12706e+06, gamma = 0.987842) p-value = 0.6171742808798646

Best model PSG( 0 |n) : LogNormal(muLog = -26.0328, sigmaLog = 8.89975e-05, gamma = 1) p-value = 0.20976491862567812

Best model PES( 0 |n) : Gamma(k = 40897.7, lambda = 4.12706e+06, gamma = 0.987842) p-value = 0.6026655560183257

Best model PTS( 0 |n) : LogNormal(muLog = -25.5223, sigmaLog = 5.38921e-05, gamma = 1) p-value = 0.055714285714285716

Test de Lilliefors

==================

Ordre k= 1

Best model PEG( 1 |n) : LogNormal(muLog = -9.34084, sigmaLog = 0.0760285, gamma = 0.00019719) p-value = 0.4729241877256318

Best model PSG( 1 |n) : Beta(alpha = 2.0922, beta = 2.36032, a = 0.000351093, b = 0.000392658) p-value = 0.6588921282798834

Best model PES( 1 |n) : LogNormal(muLog = -7.39493, sigmaLog = 0.0760285, gamma = 0.00138033) p-value = 0.47678142514011207

Best model PTS( 1 |n) : LogNormal(muLog = -6.71331, sigmaLog = 0.0401047, gamma = 0.00103231) p-value = 0.34352836879432624

Test de Lilliefors

==================

Ordre k= 2

Best model PEG( 2 |n) : Gamma(k = 5.00199, lambda = 4.37185e+06, gamma = 7.19275e-06) p-value = 0.5361736334405146

Best model PSG( 2 |n) : LogNormal(muLog = -3.89603, sigmaLog = 9.80732e-05, gamma = -0.0203003) p-value = 0.03296703296703297

Best model PES( 2 |n) : Gamma(k = 5.00199, lambda = 208183, gamma = 0.000151048) p-value = 0.5518354175070593

Best model PTS( 2 |n) : LogNormal(muLog = -9.63268, sigmaLog = 0.225222, gamma = 0.000184233) p-value = 0.07892107892107893

Test de Lilliefors

==================

Ordre k= 3

Best model PEG( 3 |n) : LogNormal(muLog = -9.1857, sigmaLog = 0.00137902, gamma = -0.000100772) p-value = 0.023976023976023976

Best model PSG( 3 |n) : Beta(alpha = 1.04361, beta = 1.66447, a = 2.90721e-06, b = 5.79377e-06) p-value = 0.000999000999000999

Best model PES( 3 |n) : LogNormal(muLog = -5.63481, sigmaLog = 0.00138518, gamma = -0.00351105) p-value = 0.022977022977022976

Best model PTS( 3 |n) : Beta(alpha = 1.12833, beta = 1.266, a = 6.20545e-05, b = 9.24656e-05) p-value = 0.00999000999000999

Test de Lilliefors

==================

Ordre k= 4

Best model PEG( 4 |n) : LogNormal(muLog = -13.6852, sigmaLog = 0.0678263, gamma = -7.48635e-07) p-value = 0.008991008991008992

Best model PSG( 4 |n) : Beta(alpha = 0.875666, beta = 1.8344, a = 4.55741e-07, b = 1.48579e-06) p-value = 0.005994005994005994

Best model PES( 4 |n) : Beta(alpha = 0.9944, beta = 1.48326, a = 9.60656e-06, b = 1.99598e-05) p-value = 0.000999000999000999

Best model PTS( 4 |n) : LogNormal(muLog = -13.3255, sigmaLog = 3.24704, gamma = 1.08751e-05) p-value = 0.07892107892107893

Test de Lilliefors

==================

Ordre k= 5

Best model PEG( 5 |n) : Beta(alpha = 0.829708, beta = 1.77852, a = 5.08153e-08, b = 2.04672e-07) p-value = 0.007992007992007992

Best model PSG( 5 |n) : Beta(alpha = 0.766877, beta = 2.10588, a = 7.03955e-08, b = 4.32479e-07) p-value = 0.06893106893106893

Best model PES( 5 |n) : Beta(alpha = 0.829708, beta = 1.77852, a = 1.06712e-06, b = 4.29812e-06) p-value = 0.003996003996003996

Best model PTS( 5 |n) : Beta(alpha = 0.818929, beta = 1.86304, a = 1.1321e-06, b = 4.97738e-06) p-value = 0.007992007992007992

[29]:

for k in range(len(IC_PEG_list)):

print('IC_PEG_', k, ' = ', IC_PEG_list[k])

for k in range(len(IC_PSG_list)):

print('IC_PSG_', k, ' = ', IC_PSG_list[k])

for k in range(len(IC_PES_list)):

print('IC_PES_', k, ' = ', IC_PES_list[k])

for k in range(len(IC_PTS_list)):

print('IC_PTS_', k, ' = ', IC_PTS_list[k])

IC_PEG_ 0 = [0.997666, 0.997839]

IC_PEG_ 1 = [0.000273448, 0.000297217]

IC_PEG_ 2 = [7.49916e-06, 9.33129e-06]

IC_PEG_ 3 = [1.47195e-06, 1.97351e-06]

IC_PEG_ 4 = [2.69732e-07, 5.22866e-07]

IC_PEG_ 5 = [4.86228e-08, 1.66726e-07]

IC_PSG_ 0 = [1, 1]

IC_PSG_ 1 = [0.000354533, 0.000386447]

IC_PSG_ 2 = [1.85701e-05, 2.55343e-05]

IC_PSG_ 3 = [2.86014e-06, 5.27513e-06]

IC_PSG_ 4 = [4.39048e-07, 1.23337e-06]

IC_PSG_ 5 = [6.54125e-08, 3.16607e-07]

IC_PES_ 0 = [0.997666, 0.997839]

IC_PES_ 1 = [0.00191414, 0.00208052]

IC_PES_ 2 = [0.000157483, 0.000195957]

IC_PES_ 3 = [5.15183e-05, 6.90728e-05]

IC_PES_ 4 = [9.44105e-06, 1.83e-05]

IC_PES_ 5 = [1.01772e-06, 3.5026e-06]

IC_PTS_ 0 = [1, 1]

IC_PTS_ 1 = [0.00216064, 0.00233412]

IC_PTS_ 2 = [0.000226653, 0.00028162]

IC_PTS_ 3 = [6.2362e-05, 9.01619e-05]

IC_PTS_ 4 = [1.04958e-05, 2.22452e-05]

IC_PTS_ 5 = [1.0748e-06, 3.96103e-06]

We draw all the estimated distributions and the title gives the best model.

[30]:

gl = ot.GridLayout(2,3)

for k in range(len(graphMargPEG_list)):

gl.setGraph(k//3, k%3, graphMargPEG_list[k])

gl

[30]:

[31]:

gl = ot.GridLayout(2,3)

for k in range(len(graphMargPSG_list)):

gl.setGraph(k//3, k%3, graphMargPSG_list[k])

gl

[31]:

[32]:

gl = ot.GridLayout(2,3)

for k in range(len(graphMargPES_list)):

gl.setGraph(k//3, k%3, graphMargPES_list[k])

gl

[32]:

[33]:

gl = ot.GridLayout(2,3)

for k in range(len(graphMargPTS_list)):

gl.setGraph(k//3, k%3, graphMargPTS_list[k])

gl

[33]:

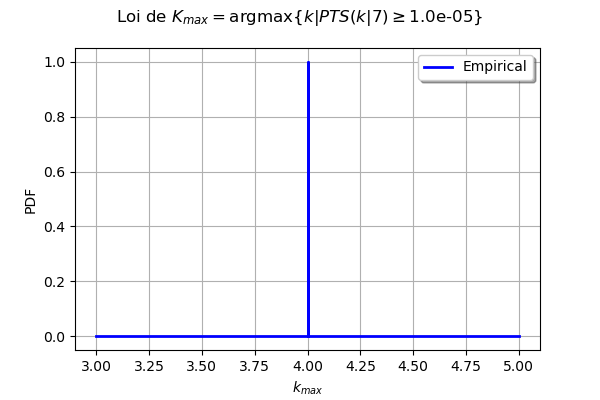

Analyse the minimal multiplicity which probability is greater than a given threshold

We fix p and we get the minimal multiplicity such that :

[34]:

p = 1.0e-5

nameSeuil = '10M5'

[35]:

kMax = myECLM.computeKMaxPTS(p)

print('kMax = ', kMax)

kMax = 4

Then we use the bootstrap sample of the Mankamo parameters to generate a sample of . We analyse the distribution of

: we estimate it with the empirical distribution and we derive a confidence interval of order

.

[36]:

fileNameSampleParam = 'sampleParamFromMankamo_{}.csv'.format(Nbootstrap)

fileNameSampleKmax = 'sampleKmaxFromMankamo_{}_{}.csv'.format(Nbootstrap, nameSeuil)

gKmax = myECLM.computeAnalyseKMaxSample(p, blockSize, fileNameSampleParam, fileNameSampleKmax)

Intervalle de confiance de niveau 90%: [ 4.0 , 4.0 ]

[37]:

gKmax

[37]:

[ ]:

oteclm

oteclm